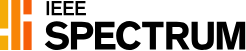

TossingBot: Learning to Throw Arbitrary Objects with Residual Physics

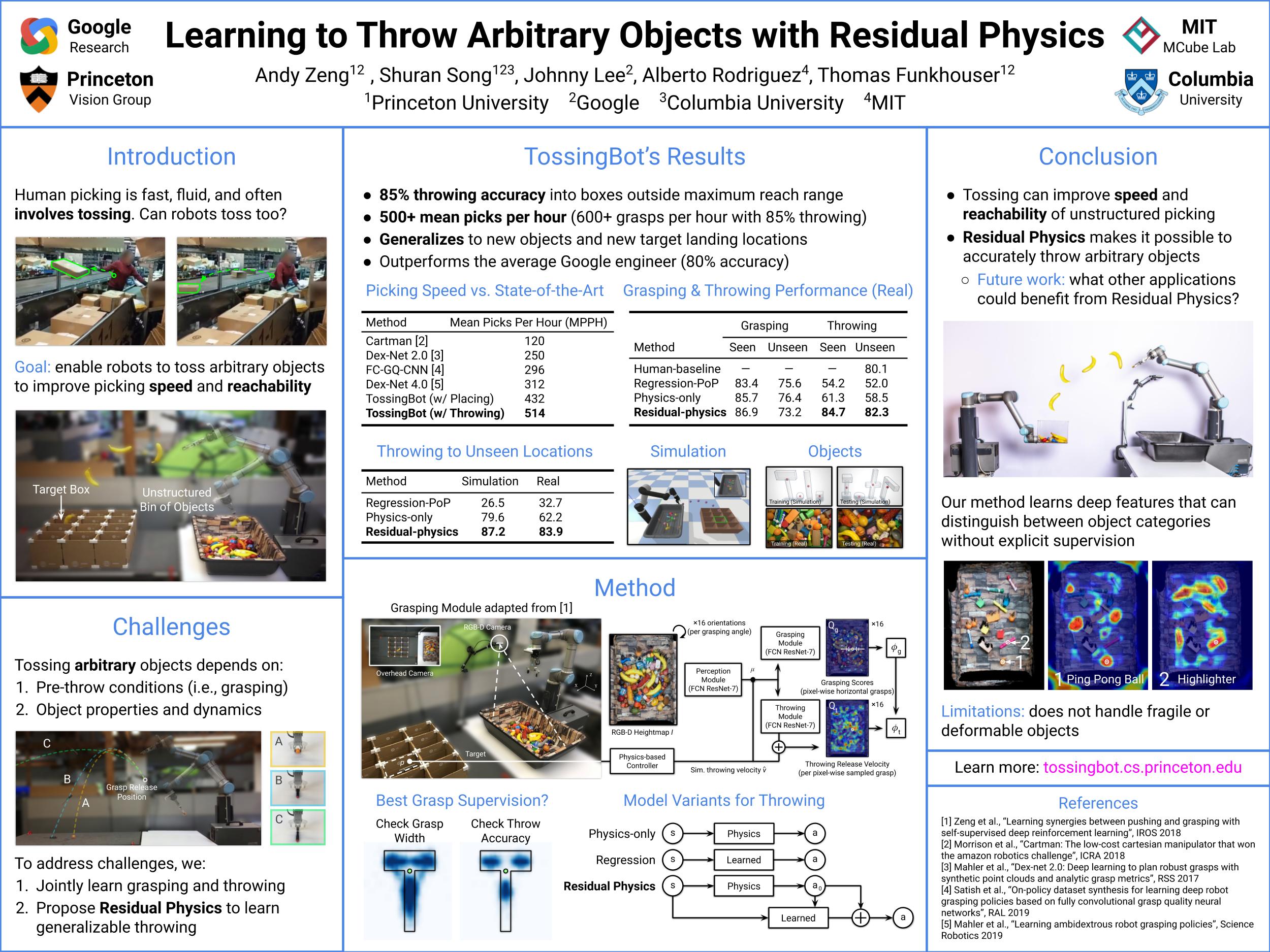

Throwing is an excellent means of exploiting dynamics to increase the capabilities of a manipulator. In pick-and-place, for example, throwing can enable a robot arm to rapidly place objects into selected boxes outside its maximum kinematic range, improving both its physical reachability and its picking speed. However, precisely throwing arbitrary objects in unstructured settings presents many challenges: from acquiring reliable pre-throw conditions (e.g., the initial pose of the object in the manipulator) to handling varying object-centric properties (e.g., mass distribution, friction, shape) and dynamics (e.g., aerodynamics).

In this work, we propose an end-to-end formulation that jointly learns to infer control parameters for grasping and throwing motion primitives from visual observations (images of arbitrary objects in a bin) through trial and error. Within this formulation, we investigate the synergies between grasping and throwing (i.e., learning grasps that enable more accurate throws) and between simulation and deep learning (i.e., using deep networks to predict residuals on top of control parameters predicted by a physics simulator). The resulting system, TossingBot, is able to grasp and throw arbitrary objects into boxes located outside its maximum reach range at 500+ mean picks per hour (600+ grasps per hour with 85% throwing accuracy), and it generalizes to new objects and target locations.
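The residual-physics idea (a learned correction added on top of a physics-based estimate) can be illustrated with a minimal Python sketch. The page does not specify the physics model, so this sketch assumes a drag-free point-mass projectile released at a fixed 45° angle. The names `ballistic_release_speed`, `residual_release_speed`, and `residual_fn` are hypothetical, and `residual_fn` merely stands in for the deep network that predicts the correction from visual features.

```python
import math
from typing import Any, Callable

G = 9.81  # gravitational acceleration in m/s^2


def ballistic_release_speed(d: float, dz: float, angle: float = math.pi / 4) -> float:
    """Release speed (m/s) so a point mass launched at `angle` (radians) lands at
    horizontal distance `d` (m) and vertical offset `dz` (m, target height minus
    release height). Assumption: no drag or other aerodynamic effects."""
    # From x(t) = v cos(a) t and z(t) = v sin(a) t - G t^2 / 2, solved at x = d.
    denom = 2.0 * math.cos(angle) ** 2 * (d * math.tan(angle) - dz)
    if denom <= 0.0:
        raise ValueError("target is unreachable at this release angle")
    return math.sqrt(G * d * d / denom)


def residual_release_speed(
    d: float,
    dz: float,
    residual_fn: Callable[[Any], float],
    features: Any,
) -> float:
    """Physics estimate plus a learned correction (hypothetical interface)."""
    v_physics = ballistic_release_speed(d, dz)
    # residual_fn is a placeholder for the deep network that predicts the
    # residual; here it can be any callable that returns a float.
    return v_physics + residual_fn(features)


if __name__ == "__main__":
    # A constant stub residual of 0.1 m/s, purely for illustration.
    v = residual_release_speed(1.0, 0.0, lambda f: 0.1, None)
    print(f"release speed: {v:.3f} m/s")
```

For a target 1 m away at release height, the ballistic term reduces to sqrt(g·d) ≈ 3.13 m/s, and the stub adds its 0.1 m/s on top. The intuition behind this design is that the network only needs to learn what the simple physics model misses (for example, aerodynamics or the object's pose in the gripper), rather than the entire mapping from target location to release velocity.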

Highlights

Paper

Latest version (Mar 27, 2019): arXiv:1903.11239 [cs.RO].

To appear at Robotics: Science and Systems (RSS) 2019

★ Best Systems Paper Award, RSS ★

Team

Bibtex

@inproceedings{zeng2019tossingbot,

title={TossingBot: Learning to Throw Arbitrary Objects with Residual Physics},

author={Zeng, Andy and Song, Shuran and Lee, Johnny and Rodriguez, Alberto and Funkhouser, Thomas},

booktitle={Proceedings of Robotics: Science and Systems (RSS)},

year={2019} }

Introductory Video (with audio)

Technical Summary Video (with audio)

Acknowledgements

Special thanks to Ryan Hickman for valuable managerial support, Ivan Krasin and Stefan Welker for fruitful technical discussions, Brandon Hurd, Julian Salazar, and Sean Snyder for hardware support, Chad Richards and Jason Freidenfelds for helpful feedback on writing, Erwin Coumans for advice on PyBullet, Laura Graesser for video narration, and Regina Hickman for photography. This work was also supported by Google, Amazon, Intel, NVIDIA, ABB Robotics, and MathWorks.

Contact

If you have any questions, please feel free to contact Andy Zeng.

Tuesday, March 26, 2019

Posted by Andy Zeng